From the « Trust Place » theory to actual code. How to give hands to AI without compromising security.

In my previous article, « Actual Web is Dead, Welcome to the Trust Place », I described a near future where the static web collapses in favor of fluid interactions between autonomous agents. It is a seductive vision, but it poses an immediate, brutal question to every Developer and CTO: Where do we start?

Theory is cheap. To make this vision alive, we must build the technical bridges that allow an AI to step out of its chatbox and interact with our real systems. This bridge has a name: the Model Context Protocol (MCP).

Today, I don’t just want to talk about it. I want to give you the keys. I have decided to open-source the code of my experimental server, ovochain-mcp-educational, so you can touch the reality of « Agentic Piloting. »

This is not a production core for the Ovochain Trust Place, but a robust educational blueprint. Here is how I architected it.

The Stack: Why FastAPI and Async?

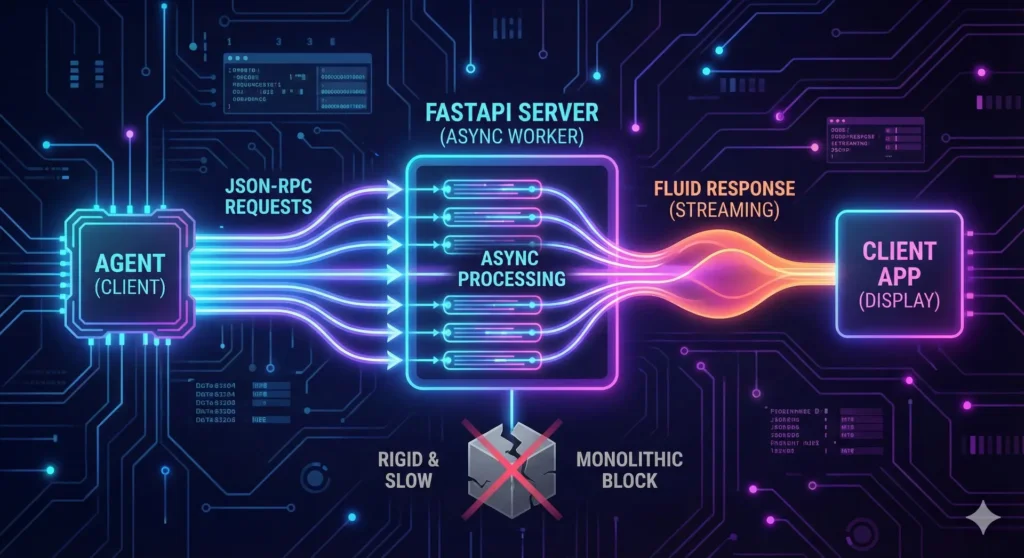

Connecting a probabilistic LLM (like Claude or Gemini) to a deterministic system (your server) requires architectural rigor. I chose Python and FastAPI for a specific reason: Latency Management.

MCP relies on a steady stream of JSON-RPC messages, often carried over SSE (Server-Sent Events). The AI « thinks, » sends a request, waits, and reacts.

- Native AsyncIO: I use FastAPI’s asynchronous handlers to serve these streams without blocking the event loop. This keeps the Agent responsive, even if it bombards the server with read requests.

- Pydantic Validation: MCP is strict. Every message is validated by Pydantic models. If the AI « hallucinates » a parameter, the request is rejected before it even reaches the business logic, protecting the runtime integrity.
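To make the two points above concrete, here is a minimal sketch of "validate first, then handle asynchronously". It deliberately uses only the Python standard library as a stand-in for Pydantic and FastAPI, so it is not the code from the repository. The names `ToolCall`, `TOOL_SCHEMAS`, `parse_tool_call` and `handle` are hypothetical, and the argument schema for `fs_search` is an assumption made for illustration.

```python
import asyncio
import json
from dataclasses import dataclass


class InvalidRequest(ValueError):
    """Raised when an incoming message does not match the expected shape."""


@dataclass(frozen=True)
class ToolCall:
    """Hypothetical validated form of a JSON-RPC tool call."""
    request_id: int
    tool: str
    arguments: dict


# Assumed schema for illustration: tool name -> {argument name: expected type}.
TOOL_SCHEMAS = {"fs_search": {"pattern": str, "path": str}}


def parse_tool_call(raw: str) -> ToolCall:
    """Reject malformed or hallucinated input before any business logic runs."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise InvalidRequest(f"not valid JSON: {exc}") from exc
    if not isinstance(msg, dict) or msg.get("jsonrpc") != "2.0":
        raise InvalidRequest("expected a JSON-RPC 2.0 object")
    params = msg.get("params")
    if not isinstance(params, dict):
        raise InvalidRequest("params must be an object")
    tool = params.get("name")
    if not isinstance(tool, str) or tool not in TOOL_SCHEMAS:
        raise InvalidRequest(f"unknown tool: {tool!r}")
    schema = TOOL_SCHEMAS[tool]
    args = params.get("arguments", {})
    if not isinstance(args, dict) or set(args) != set(schema):
        raise InvalidRequest(f"expected exactly the arguments {sorted(schema)}")
    for key, expected in schema.items():
        if not isinstance(args[key], expected):
            raise InvalidRequest(f"argument {key!r} must be {expected.__name__}")
    if not isinstance(msg.get("id"), int):
        raise InvalidRequest("id must be an integer")
    return ToolCall(msg["id"], tool, args)


async def handle(raw: str) -> dict:
    """Async entry point: validation errors become JSON-RPC error replies."""
    try:
        call = parse_tool_call(raw)
    except InvalidRequest as exc:
        # Simplification: every validation failure maps to -32602 (Invalid params).
        return {"jsonrpc": "2.0", "id": None,
                "error": {"code": -32602, "message": str(exc)}}
    await asyncio.sleep(0)  # stand-in for non-blocking I/O done by the real tool
    return {"jsonrpc": "2.0", "id": call.request_id, "result": {"tool": call.tool}}
```

A request that invents a `recursive` argument never reaches the tool: `handle` returns an error object instead. With Pydantic, the hand-written checks in `parse_tool_call` would collapse into model definitions.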

Giving « Hands »: The Git and Filesystem Modules

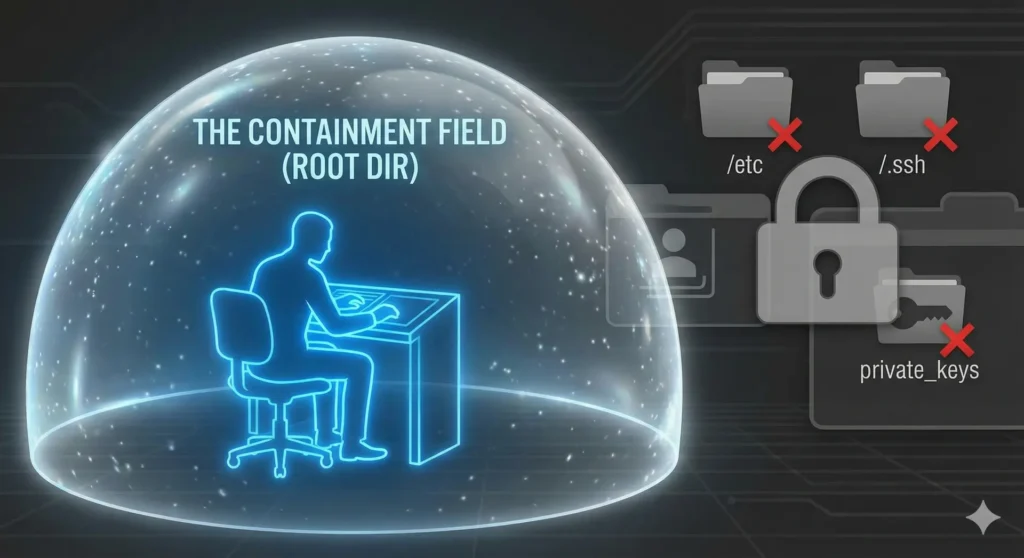

The naive implementation would be to expose a shell to the AI. That is the worst possible security mistake. In ovochain-mcp-educational, the Agent does not have system access; it has access to tools that I have specifically architected.

The Filesystem Module (The Eyes)

By default, an AI is blind. I implemented tools like fs_search (a structured grep) and fs_find_file (globbing) to allow it to « understand » the context of a project before proposing a modification. It can see the code structure, not just guess it.
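As a rough illustration of what such tools might look like, here is a small sketch. Only the tool names `fs_search` and `fs_find_file` come from the project; the signatures, the return shapes and the default `*.py` filter are assumptions, and the real implementation may differ.

```python
import re
from pathlib import Path


def fs_find_file(root: Path, glob_pattern: str) -> list[str]:
    """Return files under root matching a glob, as sorted relative POSIX paths."""
    if ".." in Path(glob_pattern).parts:
        # Assumed policy: a pattern must not climb out of the project root.
        raise ValueError("glob pattern must not contain '..'")
    root = root.resolve()
    return sorted(
        p.relative_to(root).as_posix()
        for p in root.rglob(glob_pattern)
        if p.is_file()
    )


def fs_search(root: Path, pattern: str, glob_pattern: str = "*.py") -> list[dict]:
    """Structured grep: one dict per matching line instead of raw text output."""
    regex = re.compile(pattern)
    root = root.resolve()
    hits = []
    for rel in fs_find_file(root, glob_pattern):
        text = (root / rel).read_text(encoding="utf-8", errors="replace")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if regex.search(line):
                hits.append({"file": rel, "line": lineno, "text": line.strip()})
    return hits
```

Returning structured records (file, line number, text) rather than a blob of terminal output gives the Agent something it can reason over and cite back when it proposes a change.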

The Git Module (The Memory)

The Agent doesn’t just edit files; it manages the code lifecycle. It can create a branch (feat/login-fix), commit changes with a signed message, and prepare the ground for a merge. It transforms from a text generator into a true project contributor.
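One way to expose Git without exposing a shell is to build each command as an argument list from validated inputs. The sketch below assumes that approach; the function names, the branch naming rule, and the reading of "signed" as GPG signing (`git commit -S`) are all assumptions, not details confirmed by the repository.

```python
import re
import subprocess
from pathlib import Path

# Assumed naming policy: a known prefix, then lowercase letters, digits, hyphens.
BRANCH_RE = re.compile(r"(feat|fix|chore)/[a-z0-9][a-z0-9-]*")


def branch_command(name: str) -> list[str]:
    """Build the argv for creating a branch, refusing anything off-policy."""
    if not BRANCH_RE.fullmatch(name):
        raise ValueError(f"branch name not allowed: {name!r}")
    return ["git", "checkout", "-b", name]


def commit_command(message: str) -> list[str]:
    """Build the argv for a GPG-signed commit (-S is an assumption, see above)."""
    if not message.strip():
        raise ValueError("commit message must not be empty")
    return ["git", "commit", "-S", "-m", message]


def run_git(repo: Path, argv: list[str]) -> str:
    """Run a prepared git command inside repo. No shell is involved, so
    characters like ';' or '&&' in an argument are never interpreted."""
    result = subprocess.run(argv, cwd=repo, capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `branch_command("feat/login-fix")` yields `["git", "checkout", "-b", "feat/login-fix"]`, while a name such as `main; rm -rf /` is rejected before any process is started.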

SecurityGuard: The Firewall of Intelligence

This is where the difference between a gadget and infrastructure lies. How do you prevent the Agent from running rm -rf /? The server integrates a security middleware, the SecurityGuard.
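The article does not detail the rules such a guard enforces, so the following is only a hypothetical sketch of two common checks: an allowlist of tool names and confinement of every path to a workspace directory. Only the class name `SecurityGuard` is borrowed from the project; its constructor, the `check` method and `SecurityViolation` are assumptions.

```python
from pathlib import Path


class SecurityViolation(Exception):
    """Raised when a request falls outside what the guard permits."""


class SecurityGuard:
    """Hypothetical guard: allowlisted tools only, paths confined to a workspace."""

    def __init__(self, workspace: Path, allowed_tools: set[str]):
        self.workspace = workspace.resolve()
        self.allowed_tools = frozenset(allowed_tools)

    def check(self, tool: str, path: str) -> Path:
        """Return the resolved target path, or raise before anything executes."""
        if tool not in self.allowed_tools:
            raise SecurityViolation(f"tool not allowed: {tool!r}")
        # Resolving first collapses '..' segments and follows symlinks,
        # so the comparison is made on the real destination.
        target = (self.workspace / path).resolve()
        if not target.is_relative_to(self.workspace):
            raise SecurityViolation(f"path escapes workspace: {path!r}")
        return target
```

Under these assumptions there is no "shell" tool to call in the first place, and a path such as `/` or `../../etc/passwd` is refused because it resolves outside the workspace. `Path.is_relative_to` requires Python 3.9 or later.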

The Architecture Lesson: From Local to Swarm

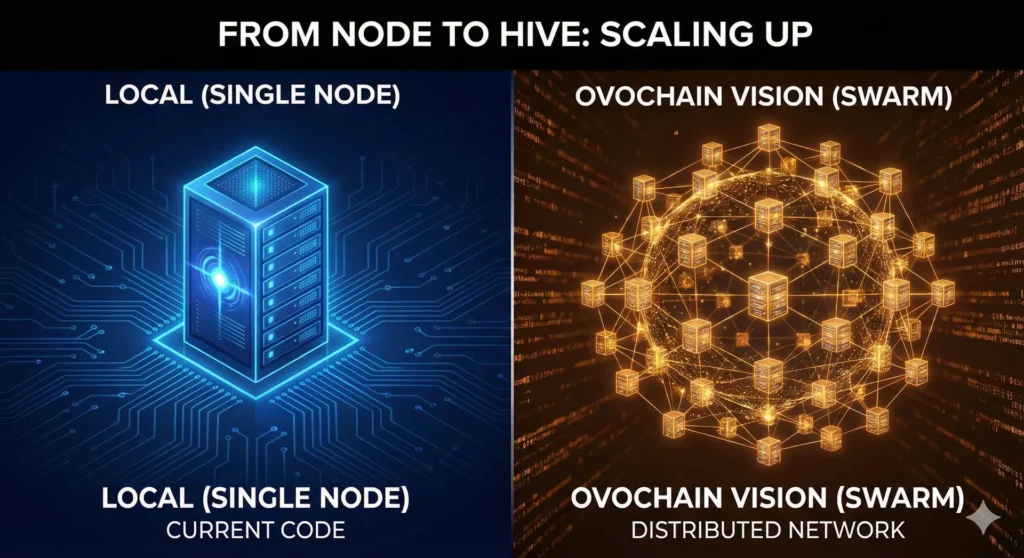

This code is designed to run on a local machine (single-node). It is perfect for learning. But what happens if the AI requests a 30-minute compilation or a massive static analysis on a monorepo?

In a local architecture, a single machine saturates quickly. This is where the Ovochain Vision comes in. For educational purposes, it is vital to understand that scaling this approach does not mean stacking threads on a single server; it means distributing the computation.

ovochain-mcp-educational runs locally (left), while the true Ovochain infrastructure (right) leverages a distributed Swarm architecture to offload heavy computation while keeping the Agent fluid.

In our production infrastructures, we separate:

- The Intent (Async): The MCP server that receives the AI’s command.

- The Execution (Swarm): A swarm of distributed processes that perform heavy lifting on dedicated nodes.

This allows the Agent’s interface to remain fluid while leveraging the brute force of the cloud for execution.
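The separation can be sketched in a few lines, under heavy simplification: an in-process `asyncio.Queue` and local worker tasks stand in for the distributed swarm, and `asyncio.sleep` stands in for the heavy job. `JobQueue`, `submit`, `worker` and `demo` are hypothetical names; none of this reflects the production system.

```python
import asyncio
import itertools


class JobQueue:
    """Intent side enqueues and returns at once; workers execute later."""

    def __init__(self):
        self._queue = asyncio.Queue()
        self._ids = itertools.count(1)
        self.status = {}  # job id -> "queued" | "running" | "done: <name>"

    async def submit(self, name: str, seconds: float) -> int:
        """What the MCP-facing handler would call: accept the job, do not wait."""
        job_id = next(self._ids)
        self.status[job_id] = "queued"
        await self._queue.put((job_id, name, seconds))
        return job_id

    async def worker(self) -> None:
        """Stand-in for one execution node pulling jobs off the queue."""
        while True:
            job_id, name, seconds = await self._queue.get()
            self.status[job_id] = "running"
            await asyncio.sleep(seconds)  # placeholder for the real heavy work
            self.status[job_id] = f"done: {name}"
            self._queue.task_done()

    async def join(self) -> None:
        """Wait until every submitted job has finished."""
        await self._queue.join()


async def demo() -> tuple:
    jobs = JobQueue()
    workers = [asyncio.create_task(jobs.worker()) for _ in range(2)]
    job_id = await jobs.submit("compile", 0.01)
    right_after_submit = jobs.status[job_id]  # the caller already has an answer
    await jobs.join()
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return right_after_submit, jobs.status[job_id]
```

The point of the pattern is that `submit` returns a job id immediately, so the Agent can keep talking to the server and poll for status, while the slow work happens elsewhere. In a distributed setup the queue would be an external broker and the workers separate machines.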

Conclusion: Towards the Trust Place

This server, ovochain-mcp-educational, solves the Capability problem (the AI can act). But the Identity problem remains (who is acting?).

If an Agent modifies your code, who is responsible? In the professional world, logs are not enough. We need Proofs.

This is what we are building with the Trust Place: a layer where every Agent interaction is signed by an Identity Token and validated by consensus, turning every operation into an irrefutable digital contract.

Ovochain is building the infrastructure that will allow your agents to pilot your assets and interact with external agents safely tomorrow.

Remember this for the future of the Web: If the Agent pilots the machine, Ovochain pilots their trust.

👉 The Code is available on GitHub: https://github.com/w-famy/ovochain-mcp-educational

Clone it, break it, build with it.
